MPI (Message Passing Interface) is a standardized message-passing library interface with several implementations. On Elwetritsch three implementations are installed: Intel MPI, Platform MPI and Open MPI. This chapter focuses on the latter, but the others should not differ greatly. We start with a small example available at mpi_1. First we load the MPI environment, compile the example and start TotalView:

module add openmpi/latest

mpicc -O0 -g -o mpi1 mpi_1.c

totalview

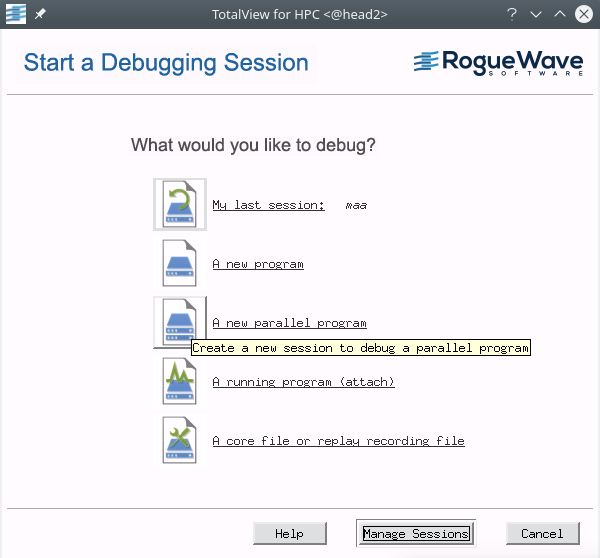

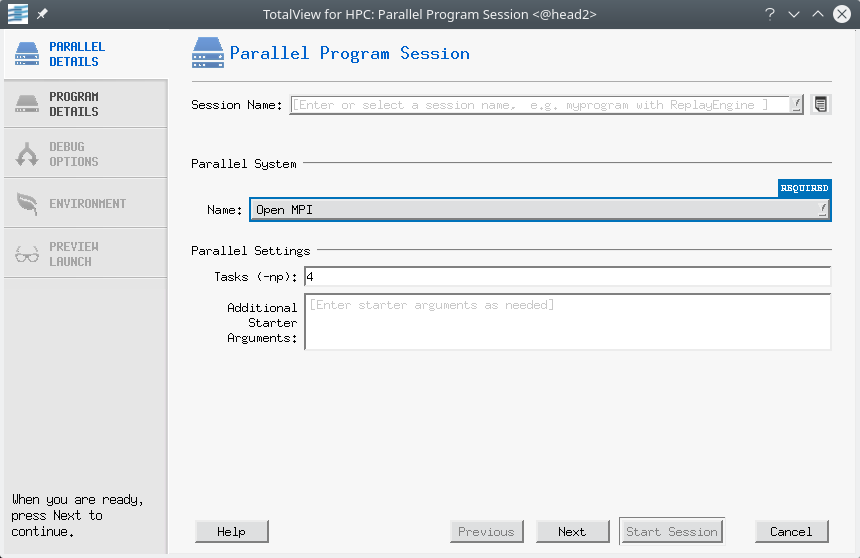

In the start window we choose to debug a new parallel program. In the dialog for the parallel session that pops up, we select the Parallel System (in our case Open MPI) and the number of Tasks (-np) we want to start. This program requires 4 tasks. You may specify a session name and continue.


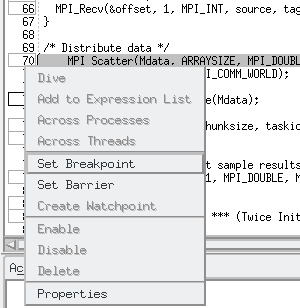

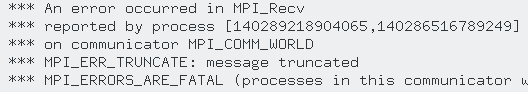

Running our program, we immediately hit an error in one of the MPI calls. So we return to the source window (on the right) and set either a breakpoint or a barrier. I choose the latter.



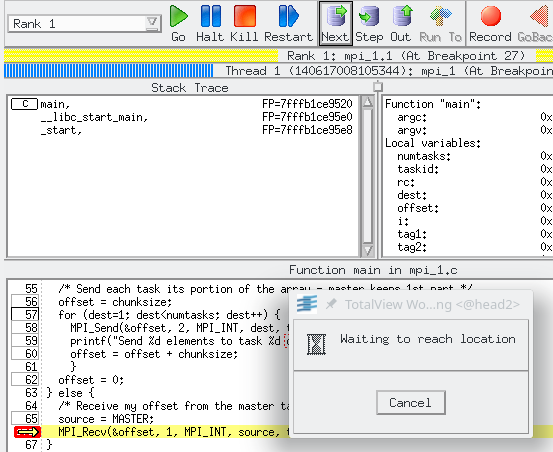

What happens next is more or less arbitrary. If you are bound to rank 0, the program will stop at the barrier (above); if you are bound to a different rank, you will see something like the picture on the left.


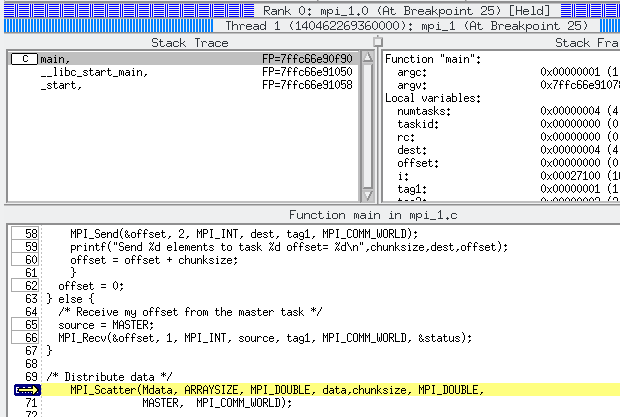

On rank 0 you may select Threads in the Action Points and Threads pane and see that your program not only started the required 4 tasks, but that system threads are associated with each task. In the toolbar you may select the process you are interested in and, for example, select one of the threads and press Go. It will change to running, while the other threads remain in their state. You can select a different thread by clicking on it in the Threads pane, or switch to a different task with the buttons to the right of that pane.


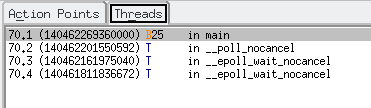

Having selected task 2, we click Go and find it waiting in pthread_spin_lock, from which it unfortunately never returns.

This function is called internally by MPI_Recv, as we can see after changing to main in the Stack Trace.

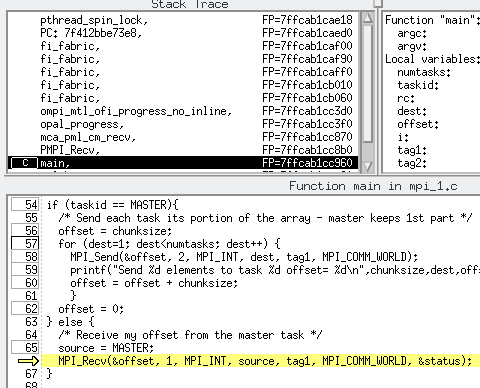

But we want to debug the program and set breakpoints after the communication calls. One way to overcome this problem is to modify the breakpoint slightly. In my small program I want only process 0 to stop at MPI_Scatter. The other processes will stop there as well, since they have to receive data from process 0 in MPI_Scatter. But process 0 should not stop immediately; it should wait some time to allow outstanding communications to complete. We right-click on the line, select Properties, change to Evaluate and send process 0 to sleep for 4 seconds.

Now that the MPI_Recv calls in processes 1-3 are executed, we face an error there. Thus we have to cope with another, previously hidden error.

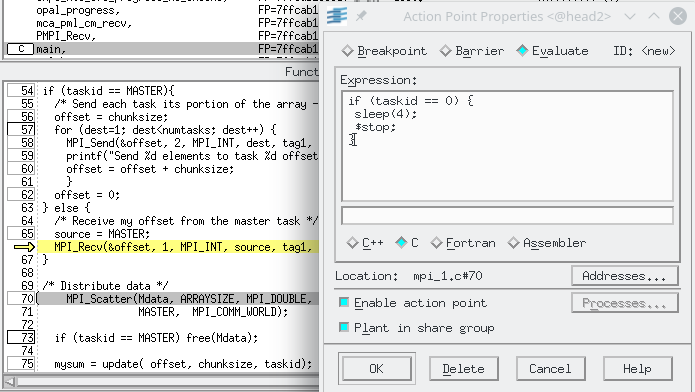

But introducing a breakpoint at MPI_Recv and trying to debug further does not help. We always get stuck at the following:

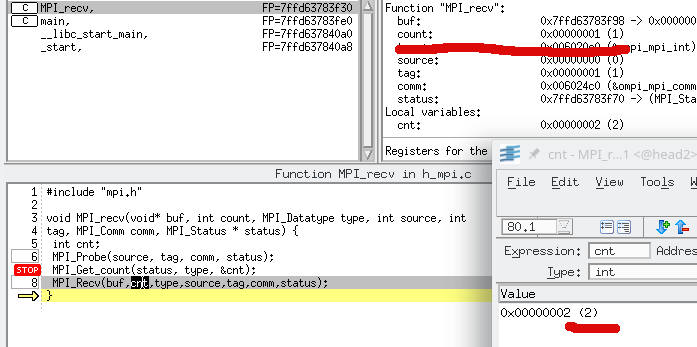

Now it is time to use h_mpi.c. It provides a wrapper for MPI_Recv that allows us to take a closer look at what is going on. In mpi_1.c you should change MPI_Recv to MPI_recv and recompile:

mpicc -O0 -g -o mpi1 mpi_1.c h_mpi.c

To set a breakpoint directly in the new file, we can open the corresponding source from the File sub-menu.

What I did in the helper file is to split the MPI_Recv call and its complex functionality into 3 sub-steps. First I wait for the message (MPI_Probe). It completes without errors, so there is no error in the arrival of the message itself. Then I analyze the message contents with MPI_Get_count (there are additional MPI functions for such analysis), and finally I call the original MPI_Recv.

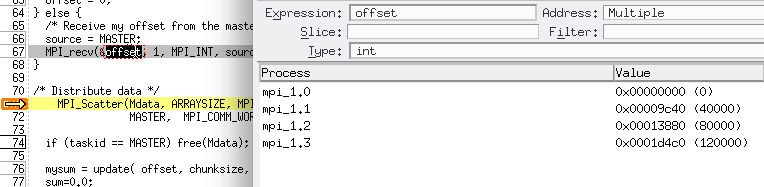

After MPI_Get_count I check the values to be passed to MPI_Recv, and now I see that the message sent contains cnt=2 integers, but I only want to receive one. Stepping back to main reveals a small mistake in MPI_Send: only one item should be sent, not two. This error is quickly corrected and the program recompiled, a conditional breakpoint is set again in front of MPI_Scatter, and there we are: the sent offsets are all correct. We can check this by diving Across Processes:

Now we are back at the first error mentioned, the one at MPI_Scatter.

Bad luck - it is still there.

We use the same trick and substitute our own function, which avoids MPI_Scatter itself and replaces it with several MPI_Send/MPI_Recv pairs. By the way, the functions in h_mpi.c should only be used for debugging. They are much slower than the original code.

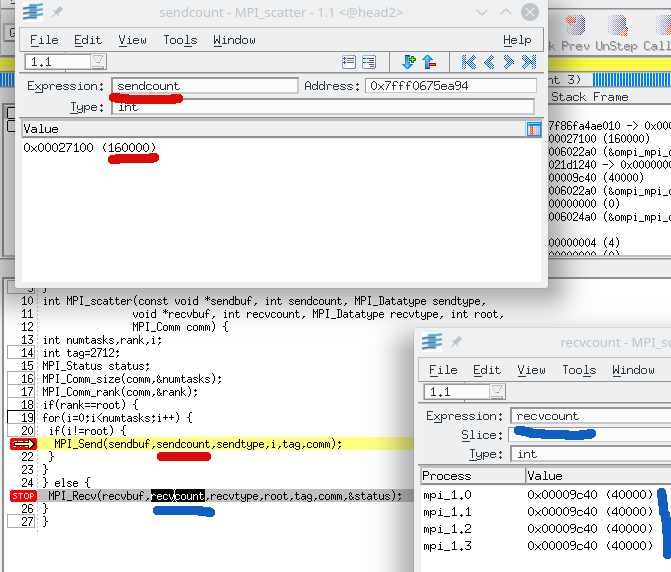

To locate the error we set breakpoints in front of MPI_Send and MPI_Recv and check the arguments passed. That way we easily find that the amount of data sent to each process (sendcount) is the full array size, but it should be the size of the chunk we want to scatter. The recvcount values are correct and identical on all processes.

Thus, in main, replace the argument ARRAY_SIZE (which corresponds to sendcount) with chunksize, and everything is fine.
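The arithmetic behind this fix can be illustrated with a small sketch. MPI_Scatter takes one block of sendcount elements per process from the root's send buffer, so the root reads sendcount times the number of processes in total. The helper names below are hypothetical, and the array size of 16 used in the usage note is an assumed value, since the real ARRAY_SIZE of mpi_1.c is not given here.

```c
#include <stddef.h>

/* Total number of elements MPI_Scatter takes from the root's send buffer:
   one block of sendcount elements for each process. */
size_t scatter_elements_read(size_t sendcount, size_t nprocs)
{
    return sendcount * nprocs;
}

/* Chunk size when the array divides evenly among the processes. */
size_t chunk_size(size_t array_size, size_t nprocs)
{
    return array_size / nprocs;
}
```

With an assumed array of 16 elements and the 4 tasks used above, passing the full array size as sendcount asks for 64 elements, while passing the chunk size of 4 asks for exactly the 16 that exist.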

Of course it hangs as well, but now we may select Halt in the toolbar and thus find the line where it is hanging. For both processes MPI_Recv seems to be the problem, in lines 24 and 32. Now that we are experienced users, we immediately include h_mpi.c, change to MPI_recv, recompile, open its source and set a breakpoint in front of MPI_Probe. This will wait for a message from any sender with any tag. We restart, select one of the processes, for example Rank 1, and click Next in the toolbar. It returns, that is, this process has received a message. Pressing Next again, we even see that the length of the message (cnt)

Next we dive into the structure status of type MPI_Status. It provides additional information like the source and the tag of the message.

We check the information in status against the arguments we use for MPI_Recv. The source=0 is correct, but tag=1 differs from MPI_TAG=0. So the sender uses tag=0, which does not match tag=1 on the receiver.